Three Dimensional AI-Segmentation of Synthetic Filter Media

Abstract

In recent years, the use of artificial intelligence (AI) tools in image processing and analysis has attracted significant interest and seen major improvements. Several techniques, including neural networks, have been used to manipulate and analyze images of fiber-based structures, a task that previously required intensive use of image filters. Over the last few years, MANN+HUMMEL has built up significant expertise in the microstructure characterization of fiber-based filter media [1].

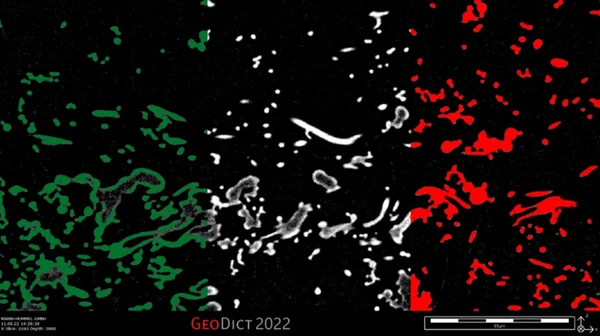

Today, µCT scans are mainly segmented with manual or Otsu-based threshold methods to obtain voxel meshes suitable for microstructure characterization and microstructure simulation. These µCT scans often pose additional challenges. For example, in filter media with multiple fiber materials, the higher-density fiber material is represented by higher brightness values, so the lower-density fiber material is often harder to distinguish from background noise. In addition, high-resolution µCT scans (resolution < 2 µm/voxel) frequently exhibit artifacts in which the fiber core is represented with lower brightness values than the fiber surface.
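
As a minimal sketch of the threshold-based baseline, the following NumPy code computes an Otsu threshold from the gray-value histogram of a volume and turns it into a binary voxel mesh. The function names `otsu_threshold` and `segment`, the bin count, and the convention that brighter voxels are solid are assumptions made for illustration; this is not the implementation used in GeoDict or by the authors.

```python
import numpy as np


def otsu_threshold(volume, bins=256):
    """Return the gray value that maximizes the between-class variance."""
    hist, edges = np.histogram(np.asarray(volume).ravel(), bins=bins)
    centers = 0.5 * (edges[:-1] + edges[1:])
    prob = hist / hist.sum()

    w0 = np.cumsum(prob)                  # weight of the darker class per split
    w1 = 1.0 - w0                         # weight of the brighter class
    cum_mean = np.cumsum(prob * centers)  # cumulative first moment
    total_mean = cum_mean[-1]

    # Between-class variance for every split; skip splits with an empty class.
    between = np.zeros(bins)
    valid = (w0 > 1e-12) & (w1 > 1e-12)
    between[valid] = (total_mean * w0[valid] - cum_mean[valid]) ** 2 / (
        w0[valid] * w1[valid]
    )
    best = int(np.argmax(between))
    return float(edges[best + 1])         # upper edge of the last "dark" bin


def segment(volume, threshold):
    """Binary voxel mesh: True = fiber material, False = pore space."""
    return np.asarray(volume) >= threshold
```

A single global threshold like this assumes that fiber and background form two separable gray-value classes, which is exactly what breaks down for low-density fibers and for fiber cores that appear darker than the fiber surface.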

This effect is particularly pronounced for so-called shots in meltblown fiber media (Figure 1), i.e., irregular, clumped accumulations of material formed when fibers melt together during manufacturing. Threshold-based segmentation of such scans yields hollow fibers and hollow shots, which make the voxel mesh unsuitable for microstructure characterization and simulation. For standard hollow fibers with round cross-sections, the hollow structure can be closed after segmentation with image processing algorithms. However, these algorithms reach their limits for more complex microstructures with hollow shots, hollow fibers with irregular cross-sections, or multiple fiber types, where they lead to strongly deviating material distributions.
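
One common way to close hollow structures after segmentation is to fill every pore region that has no connection to the outside of the volume. The sketch below does this with a flood fill over the pore space; the function name `fill_enclosed_voids` and the choice of 6-connectivity are assumptions for illustration, and the source does not state which closing algorithm is actually used.

```python
from collections import deque

import numpy as np


def fill_enclosed_voids(solid):
    """Fill pore voxels that have no 6-connected path to the volume border."""
    solid = np.asarray(solid, dtype=bool)
    if solid.ndim != 3:
        raise ValueError("expected a 3D voxel array")

    # Mark the six outer faces of the volume.
    border = np.zeros_like(solid)
    for axis in range(3):
        index = [slice(None)] * 3
        for face in (0, -1):
            index[axis] = face
            border[tuple(index)] = True

    # Breadth-first flood fill of the pore space, seeded at the border.
    outside = border & ~solid
    queue = deque(tuple(int(c) for c in seed) for seed in np.argwhere(outside))
    steps = ((1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1))
    while queue:
        z, y, x = queue.popleft()
        for dz, dy, dx in steps:
            n = (z + dz, y + dy, x + dx)
            inside_volume = all(0 <= c < s for c, s in zip(n, solid.shape))
            if inside_volume and not solid[n] and not outside[n]:
                outside[n] = True
                queue.append(n)

    # Everything the flood fill could not reach is solid or an enclosed void.
    return ~outside
```

The sketch also illustrates one limit of such post-processing: a hollow core that is open anywhere, for example where a fiber is cut by the scan boundary or where the segmented fiber wall has a gap, is connected to the outside and stays unfilled.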

A possible solution to these problems is AI-segmentation [2]. As part of method development and innovative material analysis, the potential of this still relatively young technology to realistically segment problematic µCT scans of complex fiber structures was tested and assessed on various use cases. First, parts of the µCT scan are sparsely labeled by hand. The labeled data is then used to train a 3D U-Net [3] in the simulation software GeoDict. The 3D U-Net is a fully convolutional deep neural network that offers advantages over 2D U-Net approaches because it incorporates the 3D context of the image. Finally, the trained neural network is applied to the full µCT scan to obtain the final segmentation.
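
Training on sparse labels means that only the manually annotated voxels may contribute to the loss. The following NumPy sketch shows one way to express this, a softmax cross-entropy in which unlabeled voxels are given zero weight, in the spirit of [3]. The marker `UNLABELED`, the function name `sparse_cross_entropy`, and the array layout are hypothetical; the training procedure inside GeoDict is not described in the source and may differ.

```python
import numpy as np

UNLABELED = -1  # hypothetical marker for voxels without a manual label


def sparse_cross_entropy(logits, labels):
    """Mean cross-entropy over labeled voxels only.

    logits: float array of shape (classes, Z, Y, X) with raw network outputs.
    labels: int array of shape (Z, Y, X); UNLABELED where nothing was annotated.
    """
    logits = np.asarray(logits, dtype=float)
    labels = np.asarray(labels)
    mask = labels != UNLABELED
    if not mask.any():
        raise ValueError("no labeled voxels in this volume")

    # Numerically stable log-softmax over the class axis.
    shifted = logits - logits.max(axis=0, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=0, keepdims=True))

    # Pick the log-probability of the annotated class at every voxel.
    # Unlabeled voxels get a dummy class 0 and are dropped by the mask below.
    safe_labels = np.where(mask, labels, 0)
    picked = np.take_along_axis(log_probs, safe_labels[np.newaxis], axis=0)[0]
    return float(-picked[mask].mean())
```

Because unlabeled voxels never enter the mean, the network output in those regions is unconstrained during training and is only produced when the trained network is applied to the full scan.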

This presentation compares the results of AI-segmentation on different microstructures with segmentation based on Otsu thresholding and morphological operations. Both a visual comparison of the resulting voxel meshes with the µCT scan and a validation of the resulting microstructure characterization and microstructure simulation, by material testing and by simulation with the FlowDict module of GeoDict, are discussed to demonstrate the applicability of the new method.
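
The abstract does not state which quantities are used for the comparison. As a hedged illustration only, two simple voxel-based measures that could complement a visual comparison are the solid volume fraction of each voxel mesh and the Dice overlap between the two segmentations. The function names below are assumptions, and both inputs are taken to be boolean arrays of equal shape with `True` marking fiber material.

```python
import numpy as np


def solid_volume_fraction(solid):
    """Fraction of voxels marked as fiber material (1 minus porosity)."""
    return float(np.asarray(solid, dtype=bool).mean())


def dice_overlap(seg_a, seg_b):
    """Dice coefficient between two binary voxel meshes of equal shape."""
    a = np.asarray(seg_a, dtype=bool)
    b = np.asarray(seg_b, dtype=bool)
    if a.shape != b.shape:
        raise ValueError("segmentations must have the same shape")
    total = int(a.sum()) + int(b.sum())
    if total == 0:
        return 1.0  # both meshes are empty, so they agree trivially
    return 2.0 * int(np.logical_and(a, b).sum()) / total
```

A large difference in solid volume fraction between the thresholded and the AI-segmented mesh would, for instance, point to hollow fibers or shots that one method closed and the other did not.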

References

[1] Gose, T., Kilian, A., Banzhaf, H., Keller, F., Bernewitz, R., “Augmented filter media development by virtual prototype optimization”, FILTECH 2019 – F3 - Advanced Filter Media Developments and Manufacturing Methods, Cologne, Germany, 2019

[2] Arganda-Carreras, I., Kaynig, V., Rueden, C., Eliceiri, K., Schindelin, J., Cardona, A., Seung, S., “Trainable Weka Segmentation: a machine learning tool for microscopy pixel classification”, Bioinformatics, Volume 33, Issue 15, 1 August 2017, Pages 2424–2426

[3] Çiçek, Ö., Abdulkadir, A., Lienkamp, S., Brox, T., Ronneberger, O., “3D U-Net: Learning Dense Volumetric Segmentation from Sparse Annotation”, Medical Image Computing and Computer-Assisted Intervention – MICCAI 2016